NTSC VIDEO – COMPOSITE VIDEO AND S-VIDEO

In the first decade of the 21st century, television underwent a major change. The old analog broadcast standard was replaced by a digital standard called ATSC. The old standard, known as NTSC, had been in use for over 60 years, since it was first developed in 1941. The only major change to it was adopted in 1953, adding color television capability.

There were several reasons for the change, chief among them the development of High-Definition Television (HDTV). The analog NTSC standard couldn’t carry the amount of data that HDTV requires. To provide the greater data density, the new ATSC standard had to be digital.

While digital standards have eliminated analog television broadcasting in most countries, that doesn’t mean that NTSC is dead. Many local systems still use NTSC, such as CCTV (closed-circuit television) systems. There is also an enormous library of recordings in NTSC format, made over the decades the standard was in use. Converting all of these to the new standard would be impractical.

NTSC SPECIFICATIONS

The NTSC standard, like any specification, involves a large number of details, but the two most important are resolution and frame rate. These two things limited television picture quality for those 60-plus years. In turn, they were set by the limitations of the technology available for televisions when the NTSC standard was written.

We must remember that early televisions were built with vacuum tubes, rather than transistors or today’s integrated circuits. While tubes operate much faster than a person can, they are extremely slow compared with modern computer electronics. That became one of the major limiting factors in the creation of the NTSC standard.

The other limitation was the frequency of the AC power supply. Since the power grid in North America operates at 60 Hz (cycles per second), early equipment used that frequency as its timing reference, so that any interference from the power supply would stay still on the screen instead of rolling through the picture. Therefore, a frame rate of 30 frames per second, half the power line frequency, became the standard. Strictly, the frame rate has been 29.97 frames per second since color was added in 1953, although everyone still refers to it as 30 frames per second. The rate was lowered slightly, by a factor of 1000/1001, to keep the color information from interfering with the sound signal.
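The 29.97 figure can be reproduced with a little arithmetic. The sketch below assumes the commonly cited relationship in which the nominal 30 frames per second is scaled by 1000/1001; that factor and the variable names are illustrative and do not come from the text above.

```python
from fractions import Fraction

# Nominal frame rate, and the 1000/1001 adjustment commonly cited for color NTSC
# (an assumption of this sketch, not a value taken from the article).
NOMINAL_FPS = Fraction(30)
COLOR_ADJUSTMENT = Fraction(1000, 1001)

color_fps = NOMINAL_FPS * COLOR_ADJUSTMENT   # exactly 30000/1001
frame_period_ms = 1000 / color_fps           # duration of one frame in milliseconds

print(f"frame rate:   {float(color_fps):.3f} fps")      # 29.970
print(f"frame period: {float(frame_period_ms):.3f} ms")  # 33.367
```

Using exact fractions rather than floats keeps the rate as the ratio 30000/1001, so rounding only happens when the value is printed.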

Film cameras, such as those used in Hollywood, record at 24 frames per second, rather than the 30 frames per second used for broadcast television. Converting from one to the other is a complicated exercise, which relies on the way each video frame is divided into parts. The video signal is interlaced, which means that the image isn’t painted on the screen in a single pass from top to bottom. Instead, each frame is painted as two fields of alternating rows: the odd-numbered rows first, then the even-numbered rows. Interlacing was adopted to reduce flicker without using more bandwidth, and it also lets film be converted by spreading every four film frames across ten fields, or five video frames.
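One common way to fit 24 film frames into 30 interlaced video frames is a repeating 2, 3 field cadence, often called 3:2 pulldown. The sketch below is a simplified illustration of that idea, not a description of any particular telecine machine; the function names are hypothetical, and the odd/even parity of each field is ignored.

```python
def pulldown_2_3(film_frames):
    """Repeat film frames as video fields in an alternating 2, 3 cadence."""
    fields = []
    for index, frame in enumerate(film_frames):
        repeats = 2 if index % 2 == 0 else 3
        fields.extend([frame] * repeats)
    return fields


def pair_into_video_frames(fields):
    """Group consecutive fields in twos; each pair forms one interlaced frame."""
    return [tuple(fields[i:i + 2]) for i in range(0, len(fields) - 1, 2)]


film = ["A", "B", "C", "D"]              # four film frames
fields = pulldown_2_3(film)              # ten video fields
video = pair_into_video_frames(fields)   # five interlaced video frames

print(fields)  # ['A', 'A', 'B', 'B', 'B', 'C', 'C', 'D', 'D', 'D']
print(video)   # [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'D'), ('D', 'D')]
```

Four film frames become five video frames, which is the same 4:5 ratio as 24:30. Two of the five video frames mix fields from different film frames, which is only possible because the signal is interlaced.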

Today, we describe an image by how many pixels it contains. NTSC was based upon lines of information, rather than pixels. In both cases, the picture is built up from brightness and color values at points across the screen. The difference is that digital video divides each line into a fixed number of discrete pixels, while an analog signal varies continuously along each line.

NTSC uses 525 scan lines. Of those, about 483 make up the visible image. Today, we refer to this as 480i. The rest of the lines fall in the blanking interval and carry the information needed to synchronize the image. By comparison, 1080i HDTV has 1080 visible lines. That’s over twice as many, providing a much sharper image, especially when shown on a large-screen television.
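The line counts above lead directly to a few derived figures. This is a rough sketch that assumes a frame rate of 30000/1001 (about 29.97) frames per second and the nominal 480 visible lines implied by "480i"; the constant names are illustrative.

```python
from fractions import Fraction

TOTAL_LINES = 525
VISIBLE_LINES = 480                  # nominal figure implied by "480i"
FRAME_RATE = Fraction(30000, 1001)   # assumed color NTSC frame rate, about 29.97 fps

lines_per_field = Fraction(TOTAL_LINES, 2)       # each frame is sent as two fields
line_rate_hz = TOTAL_LINES * FRAME_RATE          # scan lines drawn per second
non_visible_lines = TOTAL_LINES - VISIBLE_LINES  # lines left for synchronization
hd_ratio = Fraction(1080, VISIBLE_LINES)         # 1080i lines versus 480i lines

print(f"lines per field:   {float(lines_per_field)}")     # 262.5
print(f"line rate:         {float(line_rate_hz):.1f} Hz")  # 15734.3 Hz
print(f"non-visible lines: {non_visible_lines}")           # 45
print(f"1080i vs 480i:     {float(hd_ratio)}x")            # 2.25x
```

The half line in each field is what makes the second field land between the rows of the first.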

Color was added to the NTSC broadcast standard in 1953, by defining the colorimetric values used for broadcast TV. These were the numerical equivalents for the standard primary colors, which in turn defined the full range of colors the system could reproduce.

NTSC television signals are actually broken down into four parts:

NTSC Signal Components

Separating the chroma and luminance from each other allows the signal to be transmitted more clearly, especially considering the limitations of older equipment.

COMPOSITE VIDEO

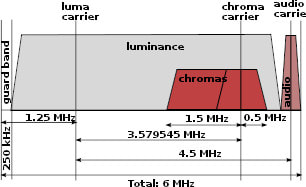

A composite video signal combines all of the above mentioned video information onto one single transmission line. This requires that the receiving television set decode the information, splitting the analog signal into its various components. This is a fairly complicated process, but relies on the basis that each of the parts of the signal is operating on a separate carrier frequency.

The carrier frequency is the base frequency that the signal is built around. In other words, you create a sign wave at a particular frequency, for the luminance part of the NTSC signal, that’s 1.25 MHz. Then you add that to the analog signal for the luminance of the image. The result will be that 1.25 MHz becomes the “zero” point for the signal. Later, when the information needs to be decoded, another 1.25 MHz signal is stripped out, returning the signal back to normal.

This allows several different signals to be “multiplexed” onto the same wire. The limitation of how many signals can be added to the same wire becomes the frequency range of the different signals. In other words, each carrier signal needs to be far enough apart that the maximum and minimum frequencies overlaid onto that carrier signal won’t reach the point where they are interfering with the signals on other carrier waves. Since analog video signals are so complex, the carrier waves need to be far apart.

NTSC television signals are actually broken down into four parts:

NTSC Signal Components

- Chroma – color

- Luminance – brightness

- Audio – the sound track (mono)

- Control signals

Separating the chroma and luminance from each other allows the signal to be transmitted more clearly, especially considering the limitations of older equipment.

COMPOSITE VIDEO

A composite video signal combines all of the above mentioned video information onto one single transmission line. This requires that the receiving television set decode the information, splitting the analog signal into its various components. This is a fairly complicated process, but relies on the basis that each of the parts of the signal is operating on a separate carrier frequency.

The carrier frequency is the base frequency that the signal is built around. In other words, you create a sign wave at a particular frequency, for the luminance part of the NTSC signal, that’s 1.25 MHz. Then you add that to the analog signal for the luminance of the image. The result will be that 1.25 MHz becomes the “zero” point for the signal. Later, when the information needs to be decoded, another 1.25 MHz signal is stripped out, returning the signal back to normal.

This allows several different signals to be “multiplexed” onto the same wire. The limitation of how many signals can be added to the same wire becomes the frequency range of the different signals. In other words, each carrier signal needs to be far enough apart that the maximum and minimum frequencies overlaid onto that carrier signal won’t reach the point where they are interfering with the signals on other carrier waves. Since analog video signals are so complex, the carrier waves need to be far apart.
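The spacing rule can be sketched as a simple check on frequency bands. The example below assumes each signal occupies its carrier frequency plus and minus its bandwidth; the `Channel` class, the `overlapping_pairs` helper, and all of the frequencies are hypothetical values chosen for illustration, not NTSC figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    name: str
    carrier_hz: int
    bandwidth_hz: int  # highest frequency in the signal placed on the carrier

    @property
    def band(self):
        """Lowest and highest frequency occupied around the carrier."""
        return (self.carrier_hz - self.bandwidth_hz,
                self.carrier_hz + self.bandwidth_hz)


def overlapping_pairs(channels):
    """Return the names of every pair of channels whose bands overlap."""
    ordered = sorted(channels, key=lambda c: c.band[0])
    clashes = []
    for i, first in enumerate(ordered):
        for second in ordered[i + 1:]:
            # second starts at or above first; they clash if it starts
            # before first's band has ended.
            if second.band[0] < first.band[1]:
                clashes.append((first.name, second.name))
    return clashes


# Hypothetical carriers, in Hz.
channels = [
    Channel("signal_a", 1_000_000, 200_000),  # occupies 0.8 to 1.2 MHz
    Channel("signal_b", 1_500_000, 200_000),  # occupies 1.3 to 1.7 MHz
    Channel("signal_c", 1_600_000, 100_000),  # occupies 1.5 to 1.7 MHz
]

print(overlapping_pairs(channels))  # [('signal_b', 'signal_c')]
```

Here signal_c sits too close to signal_b, so the two would interfere. Moving its carrier up, or narrowing either signal's bandwidth, would clear the clash.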

S-VIDEO

S-Video connector

S-Video stands for “separate video,” although many people think it refers to “super-video.” It uses a four-pin, keyed, mini-DIN connector. The two pins on the left side (as shown in the image) carry the luminance signal and its ground, and the two on the right carry the chroma signal and its ground.

Dividing the signal in this manner allows for a better quality video image. The technology for multiplexing and then splitting out the video signal is old and well established, but the separation is never perfect. Keeping the two parts of the signal separate provides a cleaner signal, which can produce a better image.
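To get a feel for what the luminance and chroma parts contain, a color can be split into one brightness value and two color values. The sketch below uses `colorsys.rgb_to_yiq` from Python's standard library, which applies an approximation of the YIQ color model associated with NTSC; the `split_luma_chroma` helper is a hypothetical name. In a real S-Video signal the two color values are modulated onto a subcarrier before being sent on the chroma pin, which this example does not attempt to show.

```python
import colorsys


def split_luma_chroma(r, g, b):
    """Split an RGB color (components 0.0 to 1.0) into luminance and chroma."""
    y, i, q = colorsys.rgb_to_yiq(r, g, b)
    return y, (i, q)


samples = {
    "white": (1.0, 1.0, 1.0),
    "mid gray": (0.5, 0.5, 0.5),
    "red": (1.0, 0.0, 0.0),
}

for name, rgb in samples.items():
    luma, chroma = split_luma_chroma(*rgb)
    print(f"{name:8s} luminance={luma:.2f} chroma=({chroma[0]:.2f}, {chroma[1]:.2f})")
```

Shades of gray produce chroma values of essentially zero, so all of their information travels on the luminance pair, while a saturated color such as red puts a large share of its information on the chroma pair.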
