Converting VGA to HDMI

I remember playing around with electronic devices as a kid. Granted, we didn’t have as much to play with back then, but I’ve always been fascinated by electronic gadgets. I’d take things apart and put them back together again. I’d connect different things to see what would happen, often to the detriment of whatever I’d connected. I guess that’s how I ended up being an engineer.

My son followed in my footsteps, at least as far as the curiosity was concerned. He didn’t become an engineer, but it sure looked like he was headed that way. Any time anything broke in the house, he’d want to take it apart and see what was inside. He had a whole box of components: circuit boards, cables, motors, buttons and lights he’d scavenged from different things. He’d hook them together, just to see what would happen. Of course, what usually happened was a flash and a spark, followed by the faint smell of smoke.

Somehow, we all seem to have the same idea: that we can just hook things together and expect them to work. While some of us learn that it isn’t quite that easy, others keep on trying. If you don’t believe me, just look for adapters on eBay sometime. You can find adapter cables to connect just about any two devices that exist. That doesn’t necessarily mean they’ll work, but somebody will be selling them.

What keeps those adapter cables from working is that the two devices often aren’t speaking the same language: the kind of signal one device sends isn’t the kind of signal the other device expects to receive.

VGA Connectors

HDMI Connectors

Take VGA (Video Graphics Array) and HDMI (High-Definition Multimedia Interface) as an example. Since both of these carry video, it would seem logical that you could connect one to the other just by making a cable with the right connectors on each end. Nothing could be further from the truth. VGA and HDMI are very different systems that don’t even speak the same language, even though they are both talking about video.

VGA was created by IBM in 1987 as a video standard for computer monitors. By today’s standards, that’s just about ancient technology. HDMI, on the other hand, was created in 2002, making it far more modern, although it is no longer the newest video connection available.

So, What’s the Difference Between VGA and HDMI?

Actually, there are lots of differences between VGA and HDMI. The first, and probably the most important, is that VGA uses an analog signal and HDMI uses a digital one. Because of that, feeding a VGA signal directly into an HDMI connector won’t produce a picture, and it could even damage the equipment, since the pins on the two connectors carry completely different kinds of signals at different voltage levels.

The second difference between VGA and HDMI is that VGA carries only video, while HDMI carries both video and digital audio (anything from stereo up to multichannel surround sound). So, when you connect a VGA device to an HDMI display, you need to bring the audio from the device with the VGA connector over a separate cable and combine it with the video on the HDMI side.

Another difference is that the pins in a VGA connector and the pins in an HDMI connector don’t match up; a VGA connector has 15 pins, while a standard HDMI connector has 19. You can’t just connect pin #1 on one to pin #1 on the other and expect it to work, which is essentially what those adapter cables do. In fact, you can’t connect the wire from pin #1 on the VGA connector to any of the pins on the HDMI connector, because none of them carries the same kind of signal.

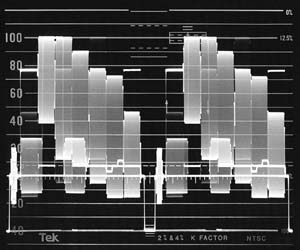
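To make the mismatch concrete, here is a small Python sketch that lines the two connectors up pin for pin, the way a straight-through adapter cable would. The pin descriptions are simplified summaries of the commonly published VGA (DE-15) and standard Type A HDMI pinouts; the “kind” labels and the function name `straight_through_report` are our own, for illustration only.

```python
# Simplified pin summaries (illustrative labels, not official names).
# Each entry: pin number -> (signal name, broad kind of signal).
VGA_PINS = {
    1: ("Red video", "analog video"),
    2: ("Green video", "analog video"),
    3: ("Blue video", "analog video"),
    4: ("Reserved (formerly monitor ID)", "monitor ID"),
    5: ("Ground", "ground"),
    6: ("Red return", "ground"),
    7: ("Green return", "ground"),
    8: ("Blue return", "ground"),
    9: ("+5 V (DDC power)", "power"),
    10: ("Sync ground", "ground"),
    11: ("Monitor ID", "monitor ID"),
    12: ("DDC data (SDA)", "DDC"),
    13: ("Horizontal sync", "sync"),
    14: ("Vertical sync", "sync"),
    15: ("DDC clock (SCL)", "DDC"),
}

HDMI_PINS = {
    1: ("TMDS Data2+", "TMDS digital"),
    2: ("TMDS Data2 shield", "ground"),
    3: ("TMDS Data2-", "TMDS digital"),
    4: ("TMDS Data1+", "TMDS digital"),
    5: ("TMDS Data1 shield", "ground"),
    6: ("TMDS Data1-", "TMDS digital"),
    7: ("TMDS Data0+", "TMDS digital"),
    8: ("TMDS Data0 shield", "ground"),
    9: ("TMDS Data0-", "TMDS digital"),
    10: ("TMDS Clock+", "TMDS digital"),
    11: ("TMDS Clock shield", "ground"),
    12: ("TMDS Clock-", "TMDS digital"),
    13: ("CEC", "control"),
    14: ("Reserved", "reserved"),
    15: ("DDC clock (SCL)", "DDC"),
    16: ("DDC data (SDA)", "DDC"),
    17: ("DDC/CEC ground", "ground"),
    18: ("+5 V power", "power"),
    19: ("Hot plug detect", "control"),
}


def straight_through_report():
    """Pair VGA pin N with HDMI pin N, as a passive adapter cable would.

    Prints each pairing and returns the pin numbers where both sides
    carry the same broad kind of signal.
    """
    matches = []
    for pin, (vga_name, vga_kind) in VGA_PINS.items():
        hdmi_name, hdmi_kind = HDMI_PINS[pin]
        print(f"pin {pin:2}: {vga_name:<32} -> {hdmi_name}")
        if vga_kind == hdmi_kind:
            matches.append(pin)
    return matches


if __name__ == "__main__":
    same_kind = straight_through_report()
    print("pins carrying the same kind of signal:", same_kind)
    # Where do VGA's three analog video pins end up?
    video_pins = [p for p, (_, kind) in VGA_PINS.items() if kind == "analog video"]
    print("HDMI pins under VGA video pins:", [HDMI_PINS[p][0] for p in video_pins])
```

Only pins 5, 8 and 15 carry the same kind of signal on both sides: two grounds and the DDC clock line a computer uses to read a monitor’s capabilities. VGA’s red, green and blue video pins land on TMDS Data2+, its shield, and TMDS Data2-, and no HDMI pin carries analog video at all, so no amount of rewiring turns one signal into the other.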

Of course, the other big difference between the two is resolution. When we use the term “native resolution,” we’re talking about the actual number of rows and columns of pixels (dots of color) that make up a screen. Many types of video equipment, from monitors to video projectors, can accept signals at a higher or lower resolution, but the picture is always displayed at the device’s native resolution.

The original VGA standard offered several graphics modes; the four best known were:

640 x 480 pixels in 16 colors

640 x 350 pixels in 16 colors

320 x 200 pixels in 16 colors

320 x 200 pixels in 256 colors

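That may not seem like much, but VGA’s predecessors were far more limited: EGA topped out at 640 x 350 pixels, and CGA before it offered only 320 x 200 pixels in four colors. So stepping up to 640 x 480 was a great improvement. Since then, many newer, higher-resolution formats that use the same VGA connector have been developed, including: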

SVGA (super VGA) which is 800 x 600 pixels

XGA (extended graphics array) which is 1024 x 768 pixels

WXGA (wide extended graphics array) which is typically 1280 x 768 or 1280 x 800 pixels, allowing for wide-screen monitors for movies

All of these formats (and there are a lot more than these) are still considered part of the VGA family. They all use the same D-subminiature connector, so any source device with this VGA connector can be physically connected to any display device that has one, although the display still has to support the resolution being sent.

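HDMI, which was developed primarily for use with HDTV, supports a range of resolutions, from standard definition up to 4K and beyond in newer versions. The two most common HD formats are: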

720p, which has 720 horizontal lines of pixels, making the actual resolution 1280 x 720

1080p, which has 1080 horizontal lines of pixels, making the actual resolution 1920 x 1080

As you can see, WXGA has about as much resolution as 720p, and some of the newer, larger formats that use the VGA connector match or exceed 1080p, but those are less common. Many VGA outputs, especially on older equipment, run at SVGA or XGA, with their lower resolution.
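To put numbers on that comparison, the short Python sketch below multiplies out the resolutions listed above and reduces each one to its aspect ratio. The `FORMATS` table and the function names are our own; for WXGA it uses the 1280 x 800 variant, and the aspect ratio calculation assumes square pixels.

```python
from math import gcd

# Resolutions mentioned above, as (width, height) in pixels.
FORMATS = {
    "VGA": (640, 480),
    "SVGA": (800, 600),
    "XGA": (1024, 768),
    "WXGA": (1280, 800),   # one common WXGA variant; 1280 x 768 also exists
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def pixel_count(name):
    """Total number of pixels in a named format."""
    width, height = FORMATS[name]
    return width * height


def aspect_ratio(width, height):
    """Reduce width:height to lowest terms (assumes square pixels)."""
    divisor = gcd(width, height)
    return f"{width // divisor}:{height // divisor}"


if __name__ == "__main__":
    for name, (w, h) in FORMATS.items():
        print(f"{name:>6}: {w} x {h} = {w * h:>9,} pixels, aspect {aspect_ratio(w, h)}")
```

The arithmetic bears out the comparison: XGA has 786,432 pixels, fewer than 720p’s 921,600, while 1280 x 800 WXGA edges past 720p with 1,024,000 (its 8:5 ratio is the same shape as 16:10). None of them comes close to 1080p’s 2,073,600.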

When a display has fewer rows and columns of pixels than the signal being sent to it, it either cuts off the edges of the image or, more commonly, reduces the number of rows and columns so that the whole image still appears correct; in the simplest approach, it just drops some of them. When a display has more rows and columns of pixels than the signal provides, it has to fill in the extra ones; the simplest way is to duplicate rows and columns, making the image larger.
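The simplest version of this, dropping or duplicating whole rows and columns, is known as nearest-neighbor scaling. The minimal Python sketch below applies it to a tiny grid of pixel values; `scale_nearest` is a hypothetical helper for illustration, and real monitors and converters do this in dedicated hardware, usually with more sophisticated methods.

```python
def scale_nearest(image, new_width, new_height):
    """Resize a 2-D grid of pixel values by dropping or repeating rows and columns.

    Each output pixel copies the input pixel at the corresponding position
    (nearest-neighbor sampling). Shrinking skips some rows and columns;
    enlarging repeats them.
    """
    old_height = len(image)
    old_width = len(image[0])
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


if __name__ == "__main__":
    small = [[1, 2],
             [3, 4]]
    # Enlarging: each pixel becomes a 2 x 2 block.
    print(scale_nearest(small, 4, 4))  # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

    big = [[1, 2, 3, 4],
           [5, 6, 7, 8],
           [9, 10, 11, 12],
           [13, 14, 15, 16]]
    # Shrinking: every other row and column is dropped.
    print(scale_nearest(big, 2, 2))  # [[1, 3], [9, 11]]
```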

This process of changing the number of lines of resolution in an input signal is called “scaling.” Scaling essentially means keeping the picture looking the same while changing its size. Since HDTV has more lines of resolution than a typical VGA signal, the signal has to be scaled at the same time it is being converted from analog to digital.
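Simply duplicating or dropping pixels tends to make edges look blocky or jagged, so most scalers interpolate instead: each new pixel is calculated as a weighted blend of its neighbors. The sketch below shows linear interpolation along a single row of brightness values; applying it to rows and then to columns gives bilinear scaling. The function name is hypothetical, and lining up the first and last pixels of the input and output is just one of several conventions real scalers use.

```python
def scale_row_linear(row, new_length):
    """Resample a row of pixel values to new_length using linear interpolation.

    The first and last output pixels line up with the first and last input
    pixels; everything in between is a weighted blend of its two nearest
    input pixels.
    """
    if len(row) < 2 or new_length < 2:
        raise ValueError("need at least two input and two output pixels")
    old_length = len(row)
    step = (old_length - 1) / (new_length - 1)
    result = []
    for i in range(new_length):
        position = i * step                    # where this output pixel falls in the input
        left = int(position)
        right = min(left + 1, old_length - 1)
        weight = position - left               # 0.0 = all left pixel, 1.0 = all right pixel
        value = row[left] * (1 - weight) + row[right] * weight
        result.append(round(value))
    return result


if __name__ == "__main__":
    print(scale_row_linear([0, 100], 5))     # [0, 25, 50, 75, 100]
    print(scale_row_linear([0, 100, 0], 5))  # [0, 50, 100, 50, 0]
```

Compared with nearest-neighbor scaling, the in-between pixels take on intermediate values, which produces smoother gradients at the cost of slightly softer edges.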

So, How Do We Get From VGA to HDMI?

It’s clear that several different things have to happen at the same time to convert a VGA signal to HDMI, and they require more than just connecting the two together. To do this, you need to pass the VGA signal through a converter, which takes the analog VGA video signal and the stereo audio signals and converts them into digital signals that can then be sent over an HDMI cable to a monitor with an HDMI connector.

These converters “read” the analog signals coming in from your computer or other device, then generate equivalent digital signals that your HDMI monitor can understand. On most converters this happens automatically, with no input from you beyond connecting the device.
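At its core, “reading” an analog signal means sampling its voltage and turning each sample into a number. The sketch below assumes the nominal VGA video level of 0 V for black up to about 0.7 V for full brightness, and an 8-bit analog-to-digital converter; both are assumptions for illustration, and a real converter also has to lock onto the sync signals to know where each pixel begins. The function names and the sample scan line are hypothetical.

```python
# Assumptions for illustration: VGA color lines swing from 0 V (black) to
# about 0.7 V (full brightness), and each sample is digitized to 8 bits.
FULL_SCALE_VOLTS = 0.7
BITS = 8


def quantize(volts, bits=BITS, full_scale=FULL_SCALE_VOLTS):
    """Map one analog voltage sample to the nearest digital code (0 .. 2**bits - 1)."""
    max_code = 2 ** bits - 1
    clamped = min(max(volts, 0.0), full_scale)  # out-of-range samples are clipped
    return round(clamped / full_scale * max_code)


def digitize_line(samples):
    """Convert one scan line of sampled voltages into 8-bit pixel values."""
    return [quantize(v) for v in samples]


if __name__ == "__main__":
    # A hypothetical red-channel scan line sampled at a few points;
    # the last sample is slightly over range and gets clipped.
    red_line = [0.0, 0.175, 0.35, 0.525, 0.7, 0.75]
    print(digitize_line(red_line))
```

The same step runs separately for the red, green and blue lines, and the resulting numbers are what the converter then scales and packages into the HDMI output.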

At the same time that these devices are converting the signal from analog to digital and from one format to another, they are scaling the image to fit the size and format of the monitor. This can also include the necessary scaling to accommodate widescreen monitor resolutions.
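Fitting a 4:3 picture onto a 16:9 screen without distorting it means scaling by whichever factor fills the screen in one direction, then filling the leftover space with black bars. The Python sketch below works through that arithmetic; `fit_to_screen` is a hypothetical name, and some converters may also offer a “stretch” setting that fills the whole screen at the cost of distorting the picture.

```python
def fit_to_screen(src_width, src_height, screen_width, screen_height):
    """Scale an image to fit a screen without distorting its shape.

    Returns (scaled width, scaled height, bar width on each side,
    bar height on top and bottom). Side bars are "pillarboxing";
    top and bottom bars are "letterboxing".
    """
    # Use the smaller scale factor so the whole picture fits on screen.
    scale = min(screen_width / src_width, screen_height / src_height)
    out_width = round(src_width * scale)
    out_height = round(src_height * scale)
    side_bar = (screen_width - out_width) // 2
    top_bar = (screen_height - out_height) // 2
    return out_width, out_height, side_bar, top_bar


if __name__ == "__main__":
    # A 4:3 XGA picture on a 16:9, 1080p display.
    print(fit_to_screen(1024, 768, 1920, 1080))  # (1440, 1080, 240, 0)
```

For an XGA source on a 1920 x 1080 screen, the scale factor is 1080 / 768 = 1.40625, so the picture becomes 1440 x 1080 with a 240-pixel black bar on each side.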

You must be careful when selecting one of these converters to ensure that it has the input connectors you actually need. Some so-called VGA to HDMI converters have a composite video input (a single round, yellow “RCA” connector), while others have a component video input (three RCA connectors, colored red, green and blue); in either case, they convert the signal to a digital HDMI output. Although those inputs aren’t technically “VGA,” such devices are often sold as VGA to HDMI converters.

VGA to HDMI converters vary considerably. The major differences are:

Type or types of input connectors accepted (VGA, composite video, component video or S-video)

Input resolutions accepted

The aspect ratio(s) that the converter can work with (standard 4:3 and widescreen 16:9)

Whether or not the converter also includes a switcher for multiple inputs (having multiple input connectors doesn’t automatically mean it can switch between them)

Whether or not it has a DVI output connector as well (DVI has the same signal characteristics as the video part of HDMI, without the audio, in a different connector package)

Output resolution (480i, 720p and/or 1080p)

Power source

Whether or not the converter has a TOSLINK optical digital audio connection

Whether the settings on the converter are purely automatic, manual, or automatic with manual override capability
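If you’re comparing several converters, the list above can be turned into a simple checklist. The sketch below is purely illustrative: `ConverterSpec`, `missing_features` and their fields are hypothetical, the example converter is made up, and it only covers the items that are easy to express as yes/no checks.

```python
from dataclasses import dataclass, field


@dataclass
class ConverterSpec:
    """Hypothetical summary of one converter's spec sheet."""
    inputs: set = field(default_factory=set)              # e.g. {"VGA", "component"}
    output_resolutions: set = field(default_factory=set)  # e.g. {"720p", "1080p"}
    aspect_ratios: set = field(default_factory=set)       # e.g. {"4:3", "16:9"}
    switches_inputs: bool = False                          # can select between inputs
    has_toslink: bool = False                              # optical digital audio output


def missing_features(spec, inputs, resolution, aspect,
                     need_switching=False, need_toslink=False):
    """List every requirement on the checklist that `spec` fails to meet."""
    problems = [f"no {name} input" for name in sorted(inputs - spec.inputs)]
    if resolution not in spec.output_resolutions:
        problems.append(f"cannot output {resolution}")
    if aspect not in spec.aspect_ratios:
        problems.append(f"does not handle {aspect}")
    if need_switching and not spec.switches_inputs:
        problems.append("cannot switch between inputs")
    if need_toslink and not spec.has_toslink:
        problems.append("no TOSLINK audio")
    return problems


if __name__ == "__main__":
    # A made-up converter, checked against a made-up set of needs.
    candidate = ConverterSpec(inputs={"VGA"},
                              output_resolutions={"720p", "1080p"},
                              aspect_ratios={"4:3", "16:9"})
    print(missing_features(candidate, inputs={"VGA", "component"},
                           resolution="1080p", aspect="16:9",
                           need_switching=True))
```

An empty list means the converter covers everything you asked for; anything else tells you exactly which item on the checklist it misses.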
